Linux Basics: Configuring NIC Bonding on Linux

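I. Bonding technology

Bonding is a Linux technique for binding network cards together: it abstracts n physical NICs on a server into a single logical NIC inside the system. This can increase network throughput and provide network redundancy and load balancing.

Bonding is implemented at the Linux kernel level as a kernel module (driver), so the system must have that module available. You can inspect it with the modinfo command; most systems ship it.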

    # modinfo bonding
    filename:       /lib/modules/2.6.32-642.1.1.el6.x86_64/kernel/drivers/net/bonding/bonding.ko
    author:         Thomas Davis, tadavis@lbl.gov and many others
    description:    Ethernet Channel Bonding Driver, v3.7.1
    version:        3.7.1
    license:        GPL
    alias:          rtnl-link-bond
    srcversion:     F6C1815876DCB3094C27C71
    depends:
    vermagic:       2.6.32-642.1.1.el6.x86_64 SMP mod_unload modversions
    parm:           max_bonds:Max number of bonded devices (int)
    parm:           tx_queues:Max number of transmit queues (default = 16) (int)
    parm:           num_grat_arp:Number of peer notifications to send on failover event (alias of num_unsol_na) (int)
    parm:           num_unsol_na:Number of peer notifications to send on failover event (alias of num_grat_arp) (int)
    parm:           miimon:Link check interval in milliseconds (int)
    parm:           updelay:Delay before considering link up, in milliseconds (int)
    parm:           downdelay:Delay before considering link down, in milliseconds (int)
    parm:           use_carrier:Use netif_carrier_ok (vs MII ioctls) in miimon; 0 for off, 1 for on (default) (int)
    parm:           mode:Mode of operation; 0 for balance-rr, 1 for active-backup, 2 for balance-xor, 3 for broadcast, 4 for 802.3ad, 5 for balance-tlb, 6 for balance-alb (charp)
    parm:           primary:Primary network device to use (charp)
    parm:           primary_reselect:Reselect primary slave once it comes up; 0 for always (default), 1 for only if speed of primary is better, 2 for only on active slave failure (charp)
    parm:           lacp_rate:LACPDU tx rate to request from 802.3ad partner; 0 for slow, 1 for fast (charp)
    parm:           ad_select:803.ad aggregation selection logic; 0 for stable (default), 1 for bandwidth, 2 for count (charp)
    parm:           min_links:Minimum number of available links before turning on carrier (int)
    parm:           xmit_hash_policy:balance-xor and 802.3ad hashing method; 0 for layer 2 (default), 1 for layer 3+4, 2 for layer 2+3 (charp)
    parm:           arp_interval:arp interval in milliseconds (int)
    parm:           arp_ip_target:arp targets in n.n.n.n form (array of charp)
    parm:           arp_validate:validate src/dst of ARP probes; 0 for none (default), 1 for active, 2 for backup, 3 for all (charp)
    parm:           arp_all_targets:fail on any/all arp targets timeout; 0 for any (default), 1 for all (charp)
    parm:           fail_over_mac:For active-backup, do not set all slaves to the same MAC; 0 for none (default), 1 for active, 2 for follow (charp)
    parm:           all_slaves_active:Keep all frames received on an interface by setting active flag for all slaves; 0 for never (default), 1 for always. (int)
    parm:           resend_igmp:Number of IGMP membership reports to send on link failure (int)
    parm:           packets_per_slave:Packets to send per slave in balance-rr mode; 0 for a random slave, 1 packet per slave (default), >1 packets per slave. (int)
    parm:           lp_interval:The number of seconds between instances where the bonding driver sends learning packets to each slaves peer switch. The default is 1. (uint)

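The seven bonding modes:

Bonding provides seven working modes. You must choose one when using it, and each has its own strengths and weaknesses.

- balance-rr (mode=0): the default. Provides high availability (fault tolerance) and load balancing. Requires switch configuration. Packets are sent round-robin across the NICs, so traffic is distributed fairly evenly.

- active-backup (mode=1): high availability (fault tolerance) only. No switch configuration required. Only one NIC is active at a time, and the bond presents a single MAC address externally. The drawback is low port utilization.

- balance-xor (mode=2): rarely used.

- broadcast (mode=3): rarely used.

- 802.3ad (mode=4): IEEE 802.3ad dynamic link aggregation. Requires switch configuration. I have not used it.

- balance-tlb (mode=5): rarely used.

- balance-alb (mode=6): high availability (fault tolerance) and load balancing. No switch configuration required. Traffic is not spread especially evenly across the interfaces.

There is plenty of material online on the details; learn the characteristics of each mode and pick the one that suits your needs. Modes 0, 1, 4, and 6 are the ones generally used.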
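The mode numbers and names above can be captured in a small lookup helper, which is handy when a script has to translate a mode= value into something readable. This is only a sketch: the function names bond_mode_name and bond_mode_needs_switch are made up for illustration, and the switch requirement is filled in only for the four modes this article describes.

```shell
#!/bin/sh
# Map a bonding mode number (0-6) to its name, as listed by `modinfo bonding`.
bond_mode_name() {
    case "$1" in
        0) echo "balance-rr" ;;
        1) echo "active-backup" ;;
        2) echo "balance-xor" ;;
        3) echo "broadcast" ;;
        4) echo "802.3ad" ;;
        5) echo "balance-tlb" ;;
        6) echo "balance-alb" ;;
        *) echo "unknown mode: $1" >&2; return 1 ;;
    esac
}

# Say whether a mode needs switch-side configuration.
# Only the modes discussed in this article (0, 1, 4, 6) are answered;
# the others are reported as not covered rather than guessed.
bond_mode_needs_switch() {
    case "$1" in
        0|4) echo "yes" ;;
        1|6) echo "no" ;;
        *)   echo "not covered here" ;;
    esac
}
```

For example, calling bond_mode_name 6 prints balance-alb, and bond_mode_needs_switch 6 prints no.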

二、CentOS7配置bonding

情況:

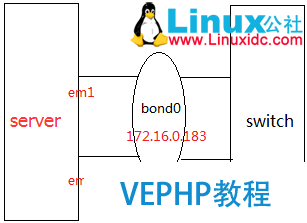

體系: Centos7 網卡: em1、em2 bond0:172.16.0.183 負載模式: mode6(adaptive load balancing)

服務器上兩張物理網卡em1和em2, 經由過程綁定成一個邏輯網卡bond0,bonding模式選擇mode6

注: ip地址配置在bond0上, 物理網卡不必要配置ip地址.

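1. Stop and disable the NetworkManager service

    systemctl stop NetworkManager.service      # stop the NetworkManager service
    systemctl disable NetworkManager.service   # keep NetworkManager from starting at boot

Note: be sure to turn it off; if left running, it interferes with the bonding setup.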

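2. Load the bonding module

    modprobe --first-time bonding

No output means the module loaded successfully. If you see "modprobe: ERROR: could not insert 'bonding': Module already in kernel", the module was already loaded and nothing more needs to be done.

You can also check whether the module is loaded with lsmod | grep bonding:

    lsmod | grep bonding
    bonding 136705 0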
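The check-then-load logic described above can be wrapped so that a script does not trip over the "already in kernel" error. The sketch below is illustrative only: module_in_lsmod and ensure_bonding are hypothetical helper names, and ensure_bonding must be run as root because it calls modprobe.

```shell
#!/bin/sh
# Succeed if module $1 appears in the first column of `lsmod`-style
# output read from stdin (exact match, so "bonding" will not match "bonding_x").
module_in_lsmod() {
    awk -v m="$1" '$1 == m { found = 1 } END { exit !found }'
}

# Load the bonding module only when it is not already listed by lsmod.
ensure_bonding() {
    if lsmod | module_in_lsmod bonding; then
        echo "bonding already loaded"
    else
        modprobe bonding && echo "bonding loaded"
    fi
}
```

Matching the whole first column is slightly stricter than grep bonding, which would also match any other module whose name merely contains that string.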

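3. Create the configuration file for the bond0 interface

    vim /etc/sysconfig/network-scripts/ifcfg-bond0

Edit it as follows, adjusting the values for your environment:

    DEVICE=bond0
    TYPE=Bond
    IPADDR=172.16.0.183
    NETMASK=255.255.255.0
    GATEWAY=172.16.0.1
    DNS1=114.114.114.114
    USERCTL=no
    BOOTPROTO=none
    ONBOOT=yes
    BONDING_MASTER=yes
    BONDING_OPTS="mode=6 miimon=100"

In BONDING_OPTS="mode=6 miimon=100" above, mode=6 selects mode 6 (adaptive load balancing), and miimon is the link monitoring interval in milliseconds, set here to 100 ms. You can set mode to any of the other bonding modes as needed.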
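If the same bond has to be set up on several servers, the file above can be generated rather than typed by hand. The following is a minimal sketch under stated assumptions: write_bond_cfg is a hypothetical helper, it writes into a directory you pass in (so it can be tried against a scratch directory before touching /etc/sysconfig/network-scripts), and it leaves out the DNS1 line for brevity.

```shell
#!/bin/sh
# Write an ifcfg-bond0 file into directory $1.
# Arguments: dir ip netmask gateway mode miimon
write_bond_cfg() {
    dir=$1 ip=$2 netmask=$3 gateway=$4 mode=$5 miimon=$6
    cat > "$dir/ifcfg-bond0" <<EOF
DEVICE=bond0
TYPE=Bond
IPADDR=$ip
NETMASK=$netmask
GATEWAY=$gateway
USERCTL=no
BOOTPROTO=none
ONBOOT=yes
BONDING_MASTER=yes
BONDING_OPTS="mode=$mode miimon=$miimon"
EOF
}
```

With the values used in this article, the call would be write_bond_cfg /some/scratch/dir 172.16.0.183 255.255.255.0 172.16.0.1 6 100; review the generated file before copying it into place.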

4. Edit the configuration file for the em1 interface

    vim /etc/sysconfig/network-scripts/ifcfg-em1

Edit it as follows:

    DEVICE=em1
    USERCTL=no
    ONBOOT=yes
    # MASTER must match the DEVICE value in the ifcfg-bond0 file above
    MASTER=bond0
    SLAVE=yes
    BOOTPROTO=none

5. Edit the configuration file for the em2 interface

    vim /etc/sysconfig/network-scripts/ifcfg-em2

Edit it as follows:

    DEVICE=em2
    USERCTL=no
    ONBOOT=yes
    # MASTER must match the DEVICE value in the ifcfg-bond0 file above
    MASTER=bond0
    SLAVE=yes
    BOOTPROTO=none
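The two slave files differ only in the DEVICE value, so they can be produced in a loop. This is a sketch, not a required step: write_slave_cfgs is a hypothetical helper that takes an output directory, the bond name, and any number of NIC names.

```shell
#!/bin/sh
# Write one ifcfg-<nic> slave file per NIC into directory $1,
# each pointing at the bond master named in $2.
# Usage: write_slave_cfgs dir master nic [nic...]
write_slave_cfgs() {
    dir=$1 master=$2
    shift 2
    for nic in "$@"; do
        cat > "$dir/ifcfg-$nic" <<EOF
DEVICE=$nic
USERCTL=no
ONBOOT=yes
MASTER=$master
SLAVE=yes
BOOTPROTO=none
EOF
    done
}
```

For the setup in this article the call would be write_slave_cfgs /some/scratch/dir bond0 em1 em2. Generating the files this way keeps MASTER identical across slaves, which avoids the mismatch the comment in the config warns about.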

6. Test

Restart the network service:

    systemctl restart network

Check the status of the bond0 interface (an error here means the setup did not succeed, most likely because bond0 did not come up):

    # cat /proc/net/bonding/bond0
    Bonding Mode: adaptive load balancing   // bonding mode: currently alb (mode 6), i.e. high availability plus load balancing
    Primary Slave: None
    Currently Active Slave: em1
    MII Status: up                          // link state: up (MII stands for Media Independent Interface)
    MII Polling Interval (ms): 100          // link polling interval (100 ms here)
    Up Delay (ms): 0
    Down Delay (ms): 0
    Slave Interface: em1                    // slave interface: em1
    MII Status: up                          // link state: up
    Speed: 1000 Mbps                        // port speed: 1000 Mbps
    Duplex: full                            // full duplex
    Link Failure Count: 0                   // number of link failures: 0
    Permanent HW addr: 84:2b:2b:6a:76:d4    // permanent MAC address
    Slave queue ID: 0
    Slave Interface: em2                    // slave interface: em2
    MII Status: up                          // link state: up
    Speed: 1000 Mbps
    Duplex: full                            // full duplex
    Link Failure Count: 0                   // number of link failures: 0
    Permanent HW addr: 84:2b:2b:6a:76:d5    // permanent MAC address
    Slave queue ID: 0
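When checking many hosts, it can help to boil that status file down to the few fields that matter: the mode, the active slave, and each slave's link state. The sketch below assumes the field labels shown in the output above; bond_summary is a hypothetical name, and it reads the status text from stdin so it can be tried on a saved copy.

```shell
#!/bin/sh
# Summarize bonding status text (format of /proc/net/bonding/bond0) from stdin.
# Prints mode=..., active=..., then one "<slave>=<MII status>" line per slave.
bond_summary() {
    awk -F': ' '
        /^Bonding Mode:/             { print "mode=" $2 }
        /^Currently Active Slave:/   { print "active=" $2 }
        /^Slave Interface:/          { slave = $2 }
        # The first "MII Status" line belongs to the bond itself, so it is
        # skipped until a slave section has started.
        /^MII Status:/ && slave != "" { print slave "=" $2 }
    '
}
```

On a live system it would be run as bond_summary < /proc/net/bonding/bond0. Any annotations added to the file contents by hand, such as the // comments in the listing above, are not present in the real file.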

Use the ifconfig command to look at the network interfaces:

    # ifconfig
    bond0: flags=5187<UP,BROADCAST,RUNNING,MASTER,MULTICAST>  mtu 1500
            inet 172.16.0.183  netmask 255.255.255.0  broadcast 172.16.0.255
            inet6 fe80::862b:2bff:fe6a:76d4  prefixlen 64  scopeid 0x20<link>
            ether 84:2b:2b:6a:76:d4  txqueuelen 0  (Ethernet)
            RX packets 11183  bytes 1050708 (1.0 MiB)
            RX errors 0  dropped 5152  overruns 0  frame 0
            TX packets 5329  bytes 452979 (442.3 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
    em1: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST>  mtu 1500
            ether 84:2b:2b:6a:76:d4  txqueuelen 1000  (Ethernet)
            RX packets 3505  bytes 335210 (327.3 KiB)
            RX errors 0  dropped 1  overruns 0  frame 0
            TX packets 2852  bytes 259910 (253.8 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
    em2: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST>  mtu 1500
            ether 84:2b:2b:6a:76:d5  txqueuelen 1000  (Ethernet)
            RX packets 5356  bytes 495583 (483.9 KiB)
            RX errors 0  dropped 4390  overruns 0  frame 0
            TX packets 1546  bytes 110385 (107.7 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
    lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
            inet 127.0.0.1  netmask 255.0.0.0
            inet6 ::1  prefixlen 128  scopeid 0x10<host>
            loop  txqueuelen 0  (Local Loopback)
            RX packets 17  bytes 2196 (2.1 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 17  bytes 2196 (2.1 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

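- In this mode=6 test, 1 packet was lost on failure, and about 5-6 packets were lost when the link was restored (cable plugged back in). High availability works, but recovery loses noticeably more packets.

- With mode=1, 1 packet was lost on failure and essentially none when the link was restored (cable plugged back in), so both failover and recovery behave well.

- Apart from the packet loss during recovery, mode 6 works well; if you can tolerate that, it is a reasonable choice. Mode 1 fails over and recovers quickly, with almost no packet loss or added latency, but port utilization is low because only one NIC carries traffic in this active-backup mode.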
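Packet-loss figures like the ones above can be read off a running ping by counting gaps in the sequence numbers. The helper below is a rough sketch: count_ping_gaps is a hypothetical name, it assumes reply lines of the form "64 bytes from ...: icmp_seq=N ..." as printed by the iputils ping on CentOS, and it only sees losses that fall between the first and last reply received.

```shell
#!/bin/sh
# Count missing icmp_seq values in `ping` output read from stdin.
# Only successful reply lines ("... bytes from ...") are considered.
count_ping_gaps() {
    awk '
        /bytes from/ && match($0, /icmp_seq=[0-9]+/) {
            # "icmp_seq=" is 9 characters; the digits follow it.
            seq = substr($0, RSTART + 9, RLENGTH - 9) + 0
            if (seen && seq > last + 1) lost += seq - last - 1
            last = seq
            seen = 1
        }
        END { print lost + 0 }
    '
}
```

One way to use it during a cable-pull test is to run ping -c 60 172.16.0.1 | count_ping_gaps from the bonded host (172.16.0.1 being the gateway in this article) and pull and re-insert a cable while it runs.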

III. Configuring bonding on CentOS 6

Configuring bonding on CentOS 6 is basically the same as on CentOS 7 above; only some configuration details differ.

    System: CentOS 6
    NICs: em1, em2
    bond0: 172.16.0.183
    Bonding mode: mode 1 (active-backup)   # the bonding mode here is 1, i.e. active-backup

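1. Stop and disable the NetworkManager service

    service NetworkManager stop
    chkconfig NetworkManager off

Note: turn it off if it is installed. If the commands report an error, it is not installed and you can ignore this step.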

2. Load the bonding module

    modprobe --first-time bonding

3. Create the configuration file for the bond0 interface

    vim /etc/sysconfig/network-scripts/ifcfg-bond0

Edit it as follows (adjust to your needs):

    DEVICE=bond0
    TYPE=Bond
    BOOTPROTO=none
    ONBOOT=yes
    IPADDR=172.16.0.183
    NETMASK=255.255.255.0
    GATEWAY=172.16.0.1
    DNS1=114.114.114.114
    USERCTL=no
    BONDING_OPTS="mode=1 miimon=100"

4. Load the bond0 interface into the kernel

    vi /etc/modprobe.d/bonding.conf

Edit it as follows:

    alias bond0 bonding

5. Edit the interface files for em1 and em2

    vim /etc/sysconfig/network-scripts/ifcfg-em1

Edit it as follows:

    DEVICE=em1
    MASTER=bond0
    SLAVE=yes
    USERCTL=no
    ONBOOT=yes
    BOOTPROTO=none

    vim /etc/sysconfig/network-scripts/ifcfg-em2

Edit it as follows:

    DEVICE=em2
    MASTER=bond0
    SLAVE=yes
    USERCTL=no
    ONBOOT=yes
    BOOTPROTO=none

6. Load the module, restart the network, and test

    modprobe bonding
    service network restart

Check the status of the bond0 interface:

    cat /proc/net/bonding/bond0
    Bonding Mode: fault-tolerance (active-backup)   # bond0 is currently in active-backup mode
    Primary Slave: None
    Currently Active Slave: em2
    MII Status: up
    MII Polling Interval (ms): 100
    Up Delay (ms): 0
    Down Delay (ms): 0
    Slave Interface: em1
    MII Status: up
    Speed: 1000 Mbps
    Duplex: full
    Link Failure Count: 2
    Permanent HW addr: 84:2b:2b:6a:76:d4
    Slave queue ID: 0
    Slave Interface: em2
    MII Status: up
    Speed: 1000 Mbps
    Duplex: full
    Link Failure Count: 0
    Permanent HW addr: 84:2b:2b:6a:76:d5
    Slave queue ID: 0
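The Link Failure Count fields in this status output record how many times each slave has lost its link, which is a quick way to confirm that a cable pull was actually registered. A small sketch for extracting them follows; bond_failure_counts is a hypothetical name, and it reads the status text from stdin.

```shell
#!/bin/sh
# Print "<slave> <link failure count>" for every slave found in
# bonding status text (format of /proc/net/bonding/bond0) read from stdin.
bond_failure_counts() {
    awk -F': ' '
        /^Slave Interface:/    { slave = $2 }
        /^Link Failure Count:/ { print slave, $2 }
    '
}
```

It would be run as bond_failure_counts < /proc/net/bonding/bond0 before and after a failover test, comparing the two results.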

Use the ifconfig command to look at the interfaces. In mode=1 you will see that all the interfaces share the same MAC address, which means the bond presents a single MAC address externally.

    ifconfig
    bond0: flags=5187<UP,BROADCAST,RUNNING,MASTER,MULTICAST>  mtu 1500
            inet6 fe80::862b:2bff:fe6a:76d4  prefixlen 64  scopeid 0x20<link>
            ether 84:2b:2b:6a:76:d4  txqueuelen 0  (Ethernet)
            RX packets 147436  bytes 14519215 (13.8 MiB)
            RX errors 0  dropped 70285  overruns 0  frame 0
            TX packets 10344  bytes 970333 (947.5 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
    em1: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST>  mtu 1500
            ether 84:2b:2b:6a:76:d4  txqueuelen 1000  (Ethernet)
            RX packets 63702  bytes 6302768 (6.0 MiB)
            RX errors 0  dropped 64285  overruns 0  frame 0
            TX packets 344  bytes 35116 (34.2 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
    em2: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST>  mtu 1500
            ether 84:2b:2b:6a:76:d4  txqueuelen 1000  (Ethernet)
            RX packets 65658  bytes 6508173 (6.2 MiB)
            RX errors 0  dropped 6001  overruns 0  frame 0
            TX packets 1708  bytes 187627 (183.2 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
    lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
            inet 127.0.0.1  netmask 255.0.0.0
            inet6 ::1  prefixlen 128  scopeid 0x10<host>
            loop  txqueuelen 0  (Local Loopback)
            RX packets 31  bytes 3126 (3.0 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 31  bytes 3126 (3.0 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

Run a high-availability test: unplug one of the network cables and watch packet loss and latency, then plug the cable back in (simulating recovery from the fault) and check packet loss and latency again.
